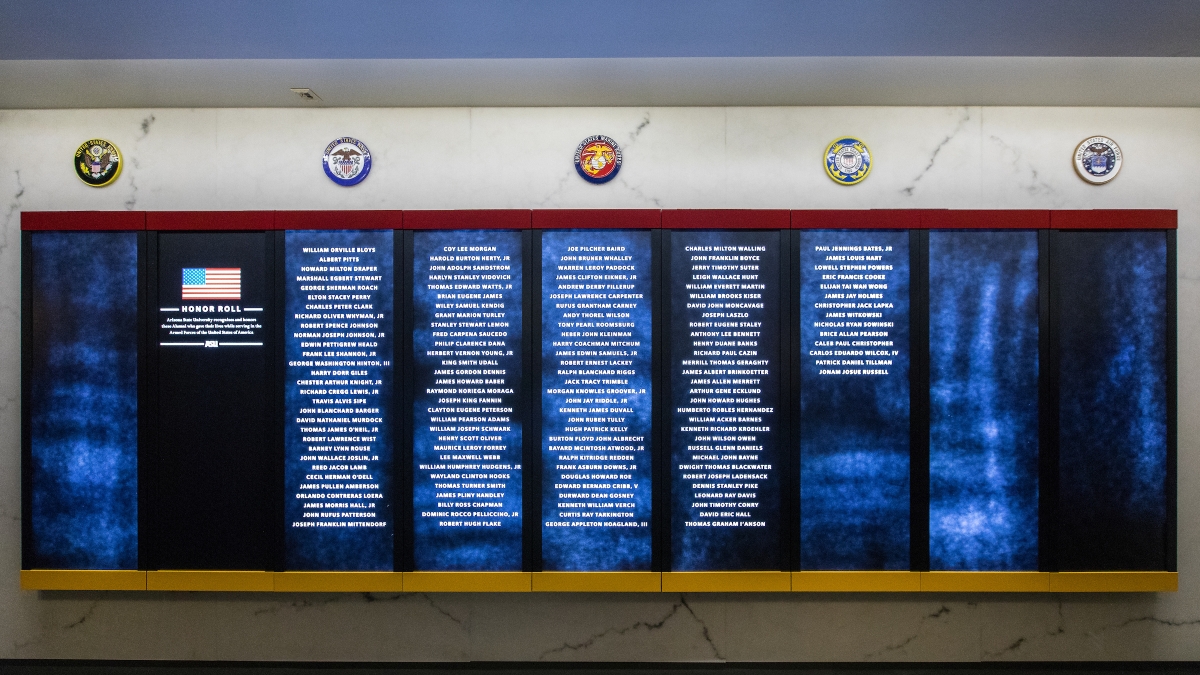

Howard Draper held his hand up to the wall of 134 names, next to his uncle’s name, which he shares.

“This is the kind of thing he would have really loved,” Draper said of his uncle, who was killed on the Aisne Plateau in northern France in 1918. He was 21.

The elder Draper and 133 other Sun Devils who gave their lives for their country were permanently honored when the Arizona State University Memorial to Fallen Alumni was dedicated today.

They died in places like Caballo Island in the Pacific, Takoradi in British West Africa, Kon Tum in Vietnam, Fallujah in Iraq and Soltan Kheyl in Afghanistan. Though they were killed on the far side of the world, they shared something with one another, and with us: they all walked across the lawns and under the palms of ASU.

Howard Draper and his son Jeff traveled from Modesto, California, for the ceremony. Theirs was one of eight families at the dedication with a son, brother, uncle or father whose name is on the wall.

Draper said his uncle would have been tickled “that they would be doing something like this.”

He speculated that his uncle might have gone into politics had he survived. The elder Howard Draper wrote patriotic essays and jumped at the chance to enlist. In Arizona, he was remembered long after the war, placed alongside Frank Luke in the Grand Canyon State’s pantheon of military heroes and cited in speeches.

“I just wonder what he could have done,” Draper said.

The fallen soldier’s great-nephew, Jeff Draper, glanced at the wall, which stands just outside the Pat Tillman Veterans Center in the Memorial Union. “I kind of wish his name wasn’t up there,” he said.

Patrick Kenney, dean of the College of Liberal Arts and Sciences, spoke at the dedication.

“The Memorial Union bears that name for a reason, and this memorial is a testament to that,” Kenney said.

Kenney’s father was a tail gunner in a B-24 over the Pacific during World War II. Kenney grew up hearing stories about “The War,” as it was always called by the Greatest Generation, the Baby Boomers and Generation X. He was also an altar boy in the 1960s, serving at Masses for three soldiers from his hometown who were killed in Vietnam.

“It was a very small parish, so everyone knew each other,” Kenney said. “Those moments are seared into me.”

Retired Navy Capt. Steven Borden, director of the Pat Tillman Veterans Center, pointed out that some of the people honored on the wall went through ASU’s ROTC program.

“It is a stark reminder of individuals who have left this institution and endeavored to make a difference,” Borden said.

Funds for the Memorial to Fallen Alumni were raised, in part, by the ASU Alumni Association Veterans Chapter and donors to PitchFunder, the ASU Foundation-led crowdfunding program designed to empower the ASU community to raise funds for projects, events and efforts that make a difference locally and across the globe.

Top photo: The Veterans Memorial Wall is located near the Pat Tillman Veterans Center in the Memorial Union, Friday, Nov. 3. The wall is dedicated to the 134 Sun Devils who made the greatest sacrifice for their country. Photo by Charlie Leight/ASU